GSoC 2024 call for project ideas!

Google Summer of Code is a global, online program focused on bringing new contributors into open source software development. GSoC Contributors work with an open source organization on a 12+ week programming project under the guidance of mentors.

As of today, applications for organisations are open (not yet for contributors). I expect projects like BRL-CAD et al., IfcOpenShell / BlenderBIM Add-on, FreeCAD, Blender, GIMP, OpenStreetMap, OSGeo and CGAL, which all took part last year, to be available again this year.

Here is the list of ideas of projects for IfcOpenShell / BlenderBIM Add-on for 2024: https://github.com/opencax/GSoC/issues?q=is:issue+is:open+label:"Project:+IfcOpenShell"

Note that these are just ideas. You can choose from the list of ideas, or come up with your own idea! Anybody who is new to contributing to open source can join! Do you have an idea? Now is a good time to add it!

FreeCAD also has a list of ideas: https://wiki.freecad.org/Google_Summer_of_Code_2023 - if you are a FreeCAD user or dev and have an idea, now is a great time to add it (and start a new page for 2024 potentially).

Comments

Before posting something silly or redundant on the IfcOpenShell / BlenderBIM Add-on 2024 GitHub list, I wanted to run it by the smart people here on the forum. My idea is this: Paper to IFC maker

Outline

There is a mountain of ‘paper plans’ of existing buildings around the world. Energy analysis, carbon assessment and maintenance forecasting are just a few areas where a portfolio of IFCs would be helpful. I propose turning these paper plans into IFC with a minimum of human intervention.

With the use of AI, the paper plan can be analyzed: walls, windows, doors, stairs, etc. are identified through their typical graphical representation, and a suitable IFC class/representation is substituted in a new IFC file in the BlenderBIM Add-on.

Human intervention will likely be required to establish/confirm the drawing scale and may include, for example, some written notes, symbols, room names, or window and door numbers. These could be read by the AI and used to define spaces and name IFC components. Window and door numbers could even be used to pick objects from an IFC openings library.
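The scale step is simple arithmetic once a human has confirmed one known dimension: the real length divided by its measured pixel length gives metres per pixel, which then converts every detected point. A minimal sketch, assuming the scan is a raster image with pixel coordinates (y pointing down); the function names are hypothetical and not part of BlenderBIM or IfcOpenShell.

```python
import math


def metres_per_pixel(p1, p2, known_length_m):
    """Scale factor from two points picked on a known dimension (e.g. a dimension line)."""
    pixel_length = math.dist(p1, p2)
    if pixel_length == 0:
        raise ValueError("calibration points must be distinct")
    return known_length_m / pixel_length


def to_metres(point_px, origin_px, scale):
    """Convert an image point to plan coordinates in metres, relative to an origin pixel."""
    x = (point_px[0] - origin_px[0]) * scale
    y = (origin_px[1] - point_px[1]) * scale  # image rows grow downwards, plan y grows upwards
    return x, y


# A dimension line labelled 5.0 m measures 1000 px on the scan.
scale = metres_per_pixel((100, 200), (1100, 200), 5.0)
corner = to_metres((300, 100), (100, 200), scale)
```

Every wall end, door jamb or room label position found by the AI could then go through `to_metres` before being placed in the IFC file.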

_Details_

Training the AI would include the ability to train for new drawing styles. This could be done by adding snips of the graphical representations of elements such as walls, windows, doors, stairs, etc. to a reference library. Default settings like wall thickness and ceiling height can be set. The AI would return a ‘confidence’ assessment of its work so that a human can check and confirm or correct any mistakes. This stage would also feed back into the AI's learning. Drawing styles can be saved separately for future use.
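The confidence assessment could drive the human check directly: detections above a threshold are accepted, the rest are queued for review, and the reviewer's decisions are kept as new training labels. A minimal sketch in plain Python; the `Detection` structure, the 0.8 threshold and the function names are assumptions for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    ifc_class: str      # proposed IFC class, e.g. "IfcWall"
    confidence: float   # model score between 0 and 1
    bbox: tuple         # (x_min, y_min, x_max, y_max) in plan metres


def triage(detections, threshold=0.8):
    """Split detections into auto-accepted ones and ones a human must confirm."""
    accepted = [d for d in detections if d.confidence >= threshold]
    to_review = [d for d in detections if d.confidence < threshold]
    return accepted, to_review


def apply_review(to_review, corrections):
    """Apply human corrections: index -> corrected IFC class, or None to reject.

    Indices not in `corrections` are confirmed as proposed. Returns the
    confirmed detections plus (detection, label) pairs to feed back into training.
    """
    confirmed, feedback = [], []
    for i, det in enumerate(to_review):
        label = corrections.get(i, det.ifc_class)
        feedback.append((det, label))
        if label is not None:
            confirmed.append(Detection(label, 1.0, det.bbox))
    return confirmed, feedback


detections = [
    Detection("IfcWall", 0.97, (0, 0, 5, 0.2)),
    Detection("IfcDoor", 0.55, (1, 0, 1.9, 0.2)),
    Detection("IfcWindow", 0.40, (3, 0, 4.2, 0.2)),
]
accepted, to_review = triage(detections)
confirmed, feedback = apply_review(to_review, {1: None})  # reviewer rejects the "window"
```

Keeping rejected items in `feedback` (labelled `None`) matters as much as the confirmed ones, since they teach the model what is not an element.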

Expected Outcome

The process of converting a paper plan to a usable IFC would be simplified and quick.

Human intervention would be coaching and confirming through knowledge and experience, reducing tedious modelling.

This would leverage the BlenderBIM addon to create IFCs.

Measurement and analysis of existing buildings would be greatly enhanced through digitizing.

_Other Possibilities_

The Paper to IFC maker could also be used to turn traditionally hand-drafted concept designs into IFCs, allowing a person with no BIM skills to participate in BIM. This would let often older, highly skilled designers be involved more directly and efficiently in BIM projects.

@Nigel

I love your idea! I spent so many hours modelling existing buildings from paper plans that, at the time, I would have loved to have a tool like this.

Let me share some thoughts about this (which I hope are not too negative).

I wonder if there is an initial validated dataset available to train an AI model on? Some kind of paper2ifc association set, which in my view is needed if we hope to train an AI model. Or maybe you think the AI should focus on elements (something like "yes, this line comes from a wall, not a slab boundary or a leader arrow"). I've seen so many different kinds of drawings that I wonder if AI could really deal efficiently with this kind of thing. (Maybe reducing the scope to analysing vectorized 2D plans first would be a good first step, I don't know...)

That said, I could imagine a UI feature that guides the user: the AI proposes values for a portion of the drawing, and it is trained by the human validating those portions of the plan and their IFC modelling by eye. A bit like the autocompletion in many tools we use daily. In practice, I have usually modelled from plans and photos, not only plans.

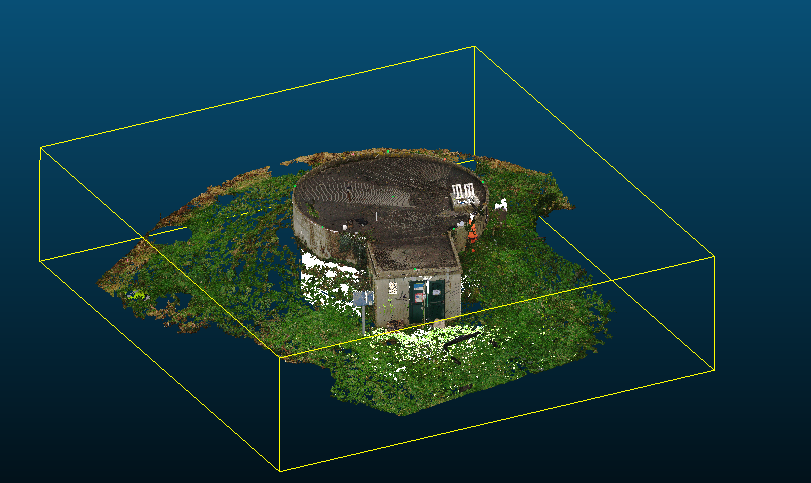

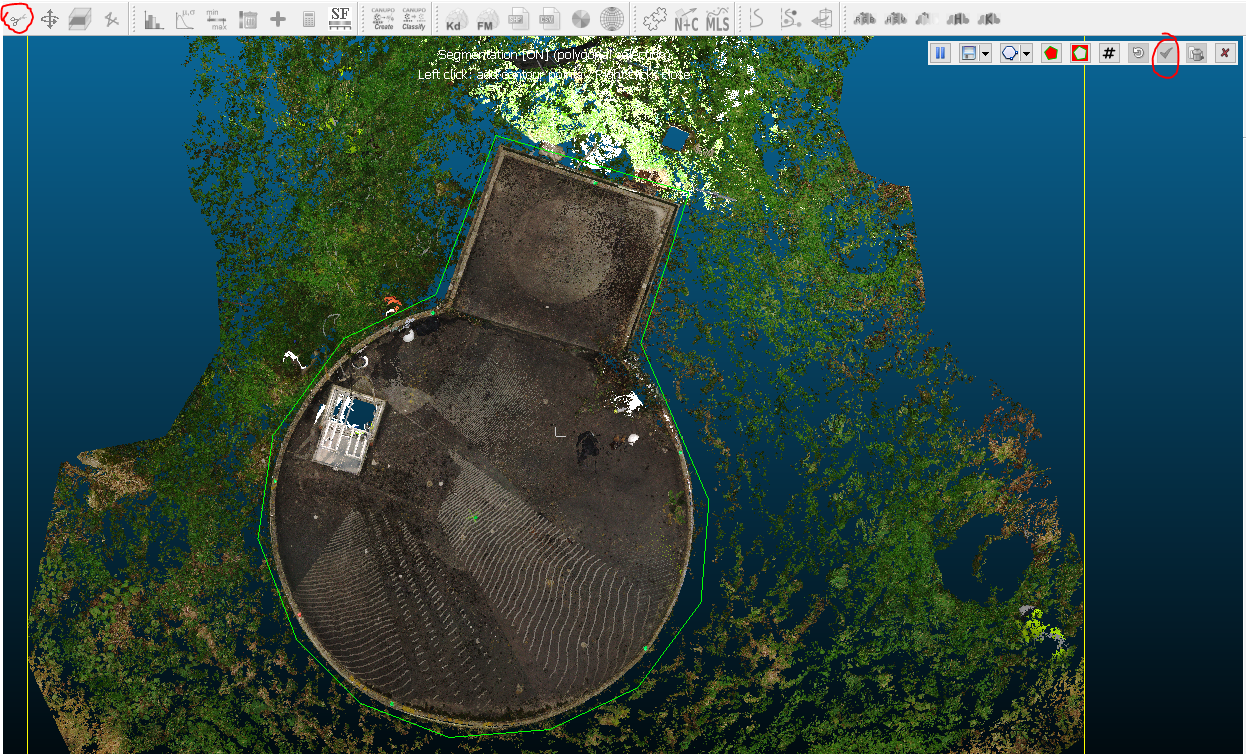

Finally, if the purpose is to represent reality, I wonder whether the best place to put AI effort is not the analysis of 3D scans to create 3D IFC models. 3D scanning seems to be much cheaper and simpler to do today. (A friend told me last month that he has a lidar in his iPhone!)

Plans are good, but sometimes they are not correct, they are old, or they come from different periods and different architects, and I don't expect AI to really be able to deal with that complexity.

I might be wrong... I'd love to be wrong... please prove me wrong!

Wishing you a good day

Last summer, I visited a conference where young people presented their findings on this topic, paper2ifc:

Method for generating multimodal synthetic data from parametric BIM models for use in AI systems:

https://hss-opus.ub.ruhr-uni-bochum.de/opus4/frontdoor/index/index/searchtype/collection/id/17165/start/7/rows/10/facetNumber_subject/all/docId/10130

Floorplan2IFC: Transformation of building floor plans into hierarchical IFC-graphs

https://hss-opus.ub.ruhr-uni-bochum.de/opus4/frontdoor/index/index/searchtype/collection/id/17165/start/15/rows/10/facetNumber_subject/all/docId/10122

Towards the rule-based synthesis of realistic floor plan images

https://hss-opus.ub.ruhr-uni-bochum.de/opus4/frontdoor/index/index/searchtype/collection/id/17165/start/48/rows/10/facetNumber_subject/all/docId/10089

Towards automated digital building model generation from floorplans and on-site images

https://hss-opus.ub.ruhr-uni-bochum.de/opus4/frontdoor/index/index/searchtype/collection/id/17165/start/5/rows/10/facetNumber_subject/all/docId/10132

And all other papers can be found here:

https://hss-opus.ub.ruhr-uni-bochum.de/opus4/solrsearch/index/search/searchtype/collection/id/17165/start/50/rows/10/facetNumber_subject/all

This is not AECO-specific, but it does speak to the idea of manual mark-ups and AI interpretation and conversion to digital. Cookies are important too! Some silly online 'clickbait' headline I saw today said 'Two-thirds of Americans said AI could do their jobs'; at that rate, 6 billion of us worldwide will be looking for work.


That's a really cool idea! GSoC ideas need to have a mentor, do you know anybody with AI/ML knowledge that would be able to mentor that aspect of it?

Not me, I'm afraid, but I would love to be involved as a tester, reviewer, or whatever that role is called.

Note that when applying for GSoC, you should never point to a list of issues as the ideas page. Using such a link will almost certainly lead to a rejection. If an application has already been made, I would suggest preparing a proper page and requesting to edit the application.

Reference: Defining a Project (Ideas List).

Hi, I thought further about @nigel's proposal and took a closer look at @Martin156131's papers, and more and more I feel like working on this subject. I don't know if it's GSoC-compatible, but here is the thing:

I am currently following a course on data science and ML at https://datascientest.com/ in Paris.

It's a sort of Master's degree in data analysis, data science and machine learning processes, with a bit of MLOps as well.

In the next 2 or 3 weeks, I have to pick an ML project to work on, what they call the "Projet fil rouge" (a project that runs through the whole course), and this is an important part of what I have to achieve to validate my degree.

There is a list to choose from, but I also have the opportunity to propose my own subject if it meets the requirements.

The project ends in October and is split into 3 main parts:

1. Data analysis: data visualisation, data cleaning, data preprocessing

2. Data modelling: training regression models and/or neural networks on the data to make predictions

3. Presenting the results in an interactive web app such as Streamlit or equivalent

For this proposal to be accepted by my mentor, I first need to prove that I can access sufficient training data for the model and that the subject is relevant. For the data, as I understand it, I would need paper plans, sections and elevations and their corresponding IFCs as "training data".

It doesn't have to be perfect data; I'll be judged on my ability to handle the ML process more than on the results (but good results would certainly be more satisfying).

That is why I'm asking you, dear community: do you know where I could find that kind of data? Open data? If it's a concern, I'm ready to sign an NDA if needed (and I guess my mentors will accept that as well).

If you have any other insight to share, please do. I would be delighted to combine my ML training journey with a subject I like (BIM) rather than predicting who might buy a new car next month.

Thanks for your help.

I'd really like something about scan2ifc.

You're free to use any PDFs in any of the repos under the following. All open source.

Obviously there's just one 'drawing style' represented on most of the pdfs, but maybe a good start for training Skynet. :)

Thanks @theoryshaw, I didn't know about these. I'll look into them tomorrow.

NP. Some of the most recent repos have PDFs exported from BB/IFC files. I'm no AI engineer, but I wonder if there's any advantage in training the AI by comparing both files together?
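Comparing both files starts with knowing which drawing came from which model. A minimal sketch that pairs PDFs with IFC files in a cloned repo, assuming (hypothetically) that an exported drawing shares its file-name stem with its model, e.g. `house.ifc` and `house.pdf`, and that extensions are lowercase; the actual naming in the OpeningDesign repos may differ, in which case the matching rule would need changing.

```python
from pathlib import Path


def pair_drawings_with_models(repo_root):
    """Return (pdf_path, ifc_path) pairs whose file names share a stem.

    Assumes 'Model.ifc' was exported as 'Model.pdf' (case-insensitive stem match);
    drawings with no matching model are returned separately.
    """
    root = Path(repo_root)
    models = {p.stem.lower(): p for p in root.rglob("*.ifc")}
    pairs, unmatched = [], []
    for pdf in sorted(root.rglob("*.pdf")):
        model = models.get(pdf.stem.lower())
        if model is not None:
            pairs.append((pdf, model))
        else:
            unmatched.append(pdf)
    return pairs, unmatched
```

Called as `pairs, unmatched = pair_drawings_with_models("path/to/repo")`, the size of `unmatched` shows how many drawings would still need their source model identified by hand.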

Also, I'm no software engineer, but my gut feeling is that you might use the following as a foundation...

https://github.com/opensourceBIM/voxelization_toolkit

The first paper I linked to is about how difficult it is in the construction industry to get large data sets to train an AI model. Even if you have these large data sets, for example PDF plans, you still have to “label” them, and doing this manually is too time-consuming. The solution chosen there is to use a script to create 2D PDF plans from a randomly generated 3D BIM model and to use the 3D BIM model to “label” them correctly via the same script. This allows any amount of synthetic, “labelled” data relevant for training an AI model to be generated. That paper did this with the Revit API; I would find it exciting to see whether such “labelled” data can be created with the same approach using the BlenderBIM API.
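The labelling trick is that the drawing and its labels come from the same generated model, so no manual annotation is needed. A toy sketch in plain Python, assuming walls are axis-aligned rectangles and the "plan" is a small binary raster with a per-pixel label mask; a real pipeline would instead create IfcWall/IfcDoor entities with IfcOpenShell/BlenderBIM and export drawings from them, which is not shown here. All names are hypothetical.

```python
import random

WALL, DOOR = 1, 2  # label ids stored in the mask (0 = background)


def random_layout(width, height, rng):
    """Toy 'BIM model': an outer wall loop plus one partition containing a door."""
    walls = [(0, 0, width, 1), (0, height - 1, width, height),
             (0, 0, 1, height), (width - 1, 0, width, height)]
    x = rng.randint(3, width - 4)        # random partition position
    walls.append((x, 0, x + 1, height))
    y = rng.randint(2, height - 5)       # random door position in the partition
    doors = [(x, y, x + 1, y + 3)]
    return walls, doors


def render(walls, doors, width, height):
    """Rasterise the model into a drawing (1 = ink) and a per-pixel label mask."""
    image = [[0] * width for _ in range(height)]
    mask = [[0] * width for _ in range(height)]
    for x0, y0, x1, y1 in walls:
        for r in range(y0, y1):
            for c in range(x0, x1):
                image[r][c], mask[r][c] = 1, WALL
    for x0, y0, x1, y1 in doors:
        # Doors are drawn as a gap in the wall but labelled as DOOR.
        for r in range(y0, y1):
            for c in range(x0, x1):
                image[r][c], mask[r][c] = 0, DOOR
    return image, mask


def make_dataset(n, width=20, height=12, seed=0):
    """Generate n (image, mask) training pairs; a fixed seed makes it reproducible."""
    rng = random.Random(seed)
    return [render(*random_layout(width, height, rng), width, height)
            for _ in range(n)]
```

Swapping the toy rectangles for real IFC geometry and the raster for exported plan drawings keeps the same principle: the generator knows the ground truth, so every drawing arrives already labelled.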

@Martin156131, I confess I didn't read the paper fully; if I remember correctly, it's in German, and my German is quite poor (guilty!).

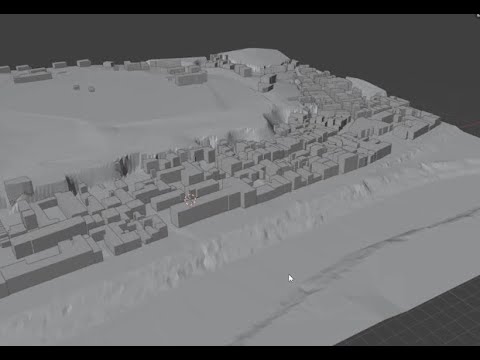

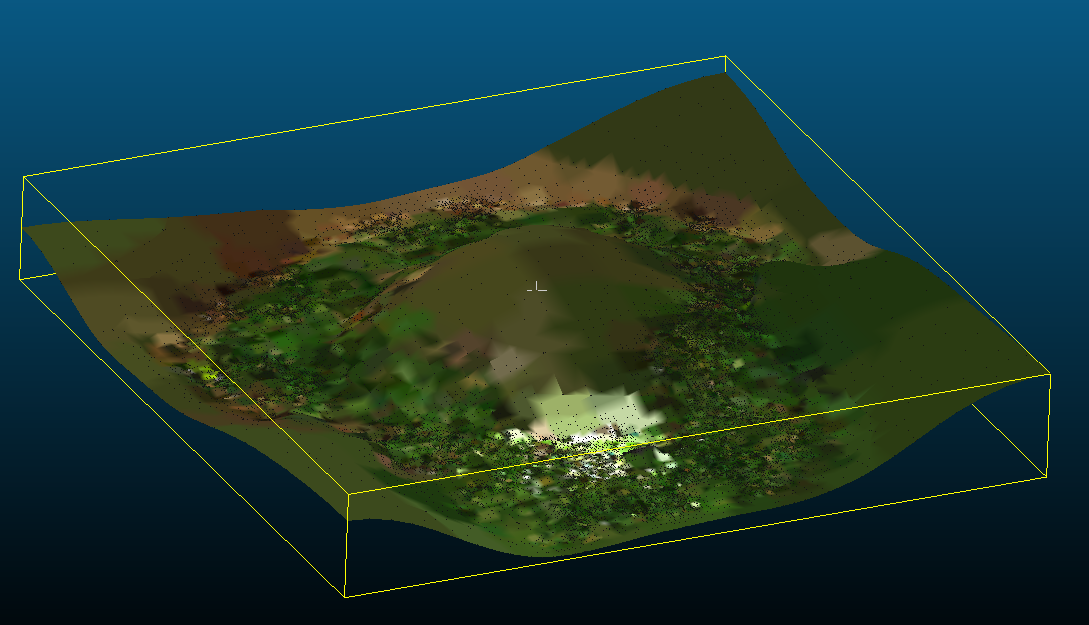

I had a meeting this morning with my mentor, and unfortunately we both agreed that the case study seems too complex for my level of expertise. I will have to find something a bit less ambitious. So I'm now thinking about detecting and setting up roof shapes from satellite photos, to push a bit further the process I use for setting up 3D environment contexts, as I show in the video below.

I have found papers like https://www.researchgate.net/publication/328972501_Roof_Shape_Classification_from_LiDAR_and_Satellite_Image_Data_Fusion_Using_Supervised_Learning but I don't really know how mature that research is.

Do you think it might be an idea of value?
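Before training a classifier, a non-ML baseline can help judge whether it adds value: fit a plane to the LiDAR points of one roof face and read off its pitch. A minimal sketch, assuming the points of a single roof face have already been isolated (a gable or hip roof would first need splitting into faces) and using a hypothetical 5° flat/pitched threshold; this is not the method of the linked paper.

```python
import math


def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def fit_plane(points):
    """Least-squares fit of z = a*x + b*y + c, solving the normal equations by Cramer's rule."""
    n = len(points)
    sx = sum(x for x, _, _ in points)
    sy = sum(y for _, y, _ in points)
    sz = sum(z for _, _, z in points)
    sxx = sum(x * x for x, _, _ in points)
    syy = sum(y * y for _, y, _ in points)
    sxy = sum(x * y for x, y, _ in points)
    sxz = sum(x * z for x, _, z in points)
    syz = sum(y * z for _, y, z in points)
    A = [[sxx, sxy, sx], [sxy, syy, sy], [sx, sy, n]]
    rhs = [sxz, syz, sz]
    d = det3(A)
    if abs(d) < 1e-12:
        raise ValueError("points are degenerate (too few or collinear in plan)")
    coeffs = []
    for col in range(3):
        m = [row[:] for row in A]
        for r in range(3):
            m[r][col] = rhs[r]  # replace one column with the right-hand side
        coeffs.append(det3(m) / d)
    return tuple(coeffs)  # (a, b, c)


def roof_pitch_degrees(points):
    """Slope of the fitted plane relative to horizontal, in degrees."""
    a, b, _ = fit_plane(points)
    return math.degrees(math.atan(math.hypot(a, b)))


def classify_roof_face(points, flat_below_deg=5.0):
    return "flat" if roof_pitch_degrees(points) < flat_below_deg else "pitched"
```

If a trained model on satellite images cannot beat this kind of geometric rule where LiDAR is available, that is itself a useful finding for the project.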

I 2nd this, as well.

So you are abandoning this? I managed to get some promising contacts with ML experts at CVUT in Prague and could try to find somebody there to mentor that part.

@JanF. I guess you are talking to me? If so, I would say no, I'm not fully abandoning it yet. I'm still interested in working on the subject, and any help from you is very welcome. What I am sure of is that I will not be able to make it the "Projet fil rouge" for my degree. I'm in the first months of my ML journey, and starting with this might be a bit too difficult for me to handle. My teacher agreed with that.

However, as a starting tip, he advised me to use IFC models to create plans and to use these plan/IFC pairs as "good data" to feed the model, before trying to go any further. I think it is a good place to start.

He also pointed this YOLO stuff out to me (see video below); it's quite impressive. I wonder if it could be used for architectural purposes.

For my "Projet fil rouge", my fallback plan is to try a simpler case, which would be analysing satellite photos to guess roof shapes, as I said earlier.

I like it too, and without having thought deeply about it, I feel it might be more accessible to me than paper2ifc at first glance.

But same question: to train a model, we would need training data, and open data of 3D scans with corresponding IFC models might be difficult to find... I don't know.

In some of the latest project repos at https://hub.openingdesign.com/OpeningDesign, we modeled the existing conditions from 3D scans made with an iPhone 14 using the Polycam app. Might help.

The .glb scans are usually at the following path:

...Open\Existing Conditions and Code Research\Existing Conditions\Scans

One big problem with 3D scans is also the huge amount of disk space point clouds take. And even if you manage to produce a 3D mesh from one, it is usually millions upon millions of polygons, which are very hard to retopologize correctly. I don't know if it can really be used to train current-generation ML algorithms. Today I think a realistic way of processing 3D scans is segmentation; Florent Poux, who is active on the subject on LinkedIn, has shared many resources on it.

I never managed to dive deep into it, but it seems promising. However, it's still reserved for developers or programmers, as the workflow requires building your own coding environment and processing everything yourself. Maybe in the future it will be released to a more general audience, but for now it's still very much experimental (IMHO).
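Plane detection is a common first segmentation step for building scans, since floors, walls and roof faces are mostly planar. A minimal pure-Python sketch of greedy RANSAC plane extraction; it is a generic technique rather than any specific workflow of Florent Poux, and the distance threshold and iteration count are assumptions that depend on the scan's units and noise. For clouds of millions of points, a compiled library (e.g. Open3D, or CloudCompare's own tools) would be the practical choice.

```python
import math
import random


def plane_from_points(p, q, r):
    """Unit normal n and offset d of the plane n.x = d through three points, or None."""
    u = [q[i] - p[i] for i in range(3)]
    v = [r[i] - p[i] for i in range(3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    length = math.sqrt(sum(c * c for c in n))
    if length < 1e-12:
        return None  # the three points are collinear
    n = [c / length for c in n]
    return n, sum(n[i] * p[i] for i in range(3))


def ransac_plane(points, threshold=0.02, iterations=200, seed=0):
    """Plane supported by the most points within `threshold` (same units as the cloud)."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, []
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        n, d = plane
        inliers = [i for i, p in enumerate(points)
                   if abs(sum(n[k] * p[k] for k in range(3)) - d) <= threshold]
        if len(inliers) > len(best_inliers):
            best_plane, best_inliers = plane, inliers
    return best_plane, best_inliers


def segment_planes(points, max_planes=3, min_inliers=50, **kwargs):
    """Greedily peel off the largest planes (floor, walls...) and return them with their points."""
    remaining = list(points)
    segments = []
    while len(segments) < max_planes and len(remaining) >= 3:
        plane, inliers = ransac_plane(remaining, **kwargs)
        if plane is None or len(inliers) < min_inliers:
            break
        keep = set(inliers)
        segments.append((plane, [remaining[i] for i in inliers]))
        remaining = [p for i, p in enumerate(remaining) if i not in keep]
    return segments, remaining
```

Each extracted plane's normal already hints at its role: a near-vertical normal suggests a floor, ceiling or flat roof, a horizontal one a wall.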

@Gorgious, I discovered Dr Poux's videos last Sunday while I was looking around for hints about my subject. It is indeed very promising.

I asked him about a workflow using LIDAR data to vectorise low-poly meshes of geographic context, and he seems interested in doing a video on the subject.

Concerning buildings, his point cloud videos are on my personal to-do list; I guess I have a lot to learn from them. He seems to have developed techniques that might be used to detect objects in a point cloud scene.

It's really cool.

Yes, usually this is the case, except that this is a specially created GSoC ideas list (not a bug tracker, even though it uses the GitHub issues system) created by the umbrella organisation BRL-CAD, which has been part of GSoC for many years now. We don't apply directly; we are under the umbrella, so we follow BRL-CAD's rules.

@bdamay have you considered partnering with a company where they can share their BIM models? (other than the very generous open data license from the OpeningDesign projects)

As for point clouds, given their heaviness, I think people would generally get a better return on investment from 360 photos, assuming it's a job that 360 photos can also do, like on-site verification.

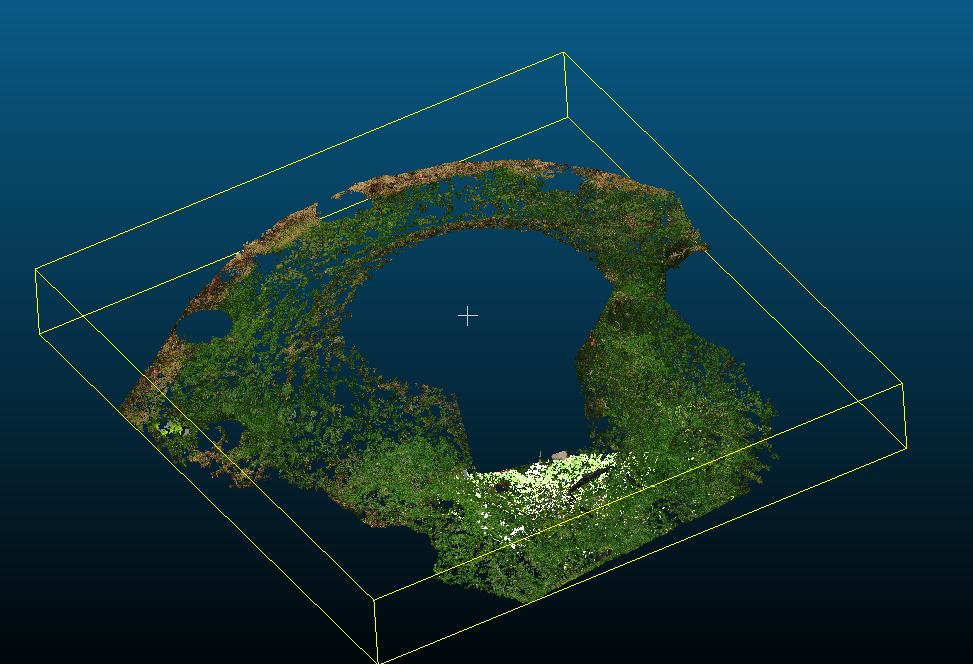

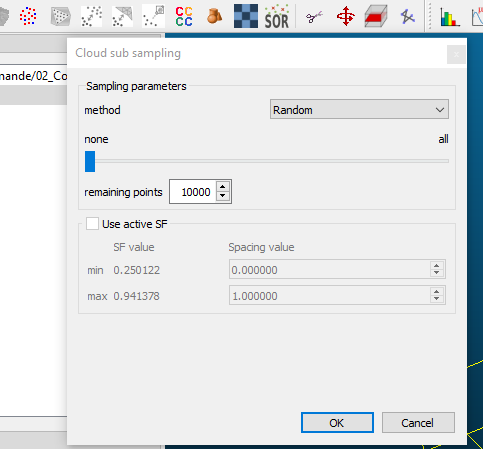

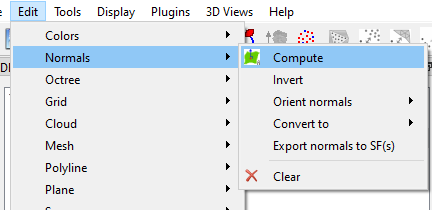

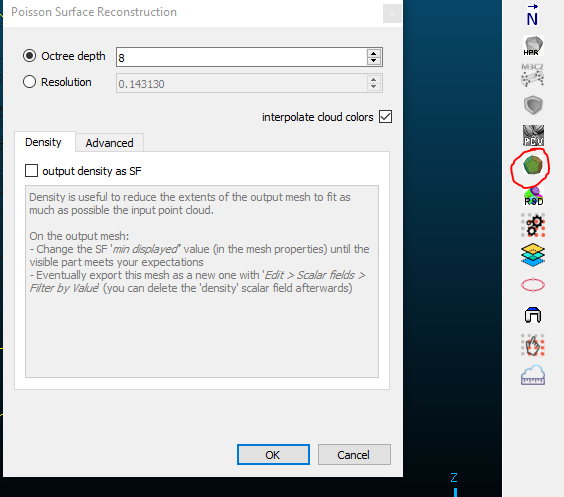

FWIW, here's a simple manual workflow if you want to mesh a low-poly terrain using CloudCompare:

Load in the point cloud

Segment out the buildings and non-terrain features

Cull most points with the subsampling tool

Compute the normals

Reconstruct the mesh with the Poisson algorithm (a higher octree depth means more polygons)

Here's your 3D mesh (don't use it as a topographical reference)
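The subsampling and octree steps in that workflow can be sketched in a few lines. This is voxel-grid subsampling (every point in a cubic cell replaced by the cell's centroid), a generic technique and not CloudCompare's own implementation, which offers its own random, spatial and octree-based modes; the cell size is an assumption to tune per site. The second helper shows why a higher Poisson octree depth means more polygons: each extra level halves the finest cell edge.

```python
import math
from collections import defaultdict


def voxel_subsample(points, cell_size):
    """Replace all points falling in the same cubic cell by their centroid."""
    if cell_size <= 0:
        raise ValueError("cell_size must be positive")
    cells = defaultdict(lambda: [0.0, 0.0, 0.0, 0])  # running sums x, y, z and count
    for x, y, z in points:
        key = (math.floor(x / cell_size), math.floor(y / cell_size), math.floor(z / cell_size))
        acc = cells[key]
        acc[0] += x
        acc[1] += y
        acc[2] += z
        acc[3] += 1
    return [(sx / n, sy / n, sz / n) for sx, sy, sz, n in cells.values()]


def octree_cell_size(extent, depth):
    """Edge length of the finest cell of an octree of the given depth over a cubic extent."""
    return extent / 2 ** depth
```

For example, a depth-10 reconstruction over a 200 m wide site resolves detail down to roughly 200 / 2^10 ≈ 0.2 m; one level deeper halves that and multiplies the potential cell count by 8.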

Yes, I have asked around, but it seems difficult: either they have a point cloud but no corresponding model, or they have a model with no point cloud. I will keep asking, but I'm putting this aside for now; I might have been too enthusiastic at first. I also took a look at @theoryshaw's open data, opened one or two .glb point clouds, and realized I didn't really know where to start.

So for now, considering my course project:

For GSoC, I would be interested in giving it a try as a contributor on "Web-based UI integration with Blender", if this subject is still in (I might give it a try even if it's not). But first I must get better acquainted with the BlenderBIM source code; I'm currently only at the "playing around with the demo module" stage. Once I have fully understood the logic, I'll give it a go.

Have a good day.

Seems like the following could be harnessed somehow as well, considering the conversations above.

https://github.com/gibbonCode/GIBBON

Hi @moult, I understand 6 February was the deadline for submitting the GSoC list. Did you apply with a list? If so, is it the one you mentioned on GitHub? If I'm correct, GSoC will publish the official list on 21 February, and I am definitely willing to give open source development with BlenderBIM a try.

Have a good day.

Benoit

Hey all! A little late but great news, we've been accepted for GSoC 2024!

There are 10 project ideas available here, or you can create your own: https://github.com/opencax/GSoC/issues?q=is:issue+is:open+label:"Project:+IfcOpenShell" . If you'd like to get involved the steps are here: https://opencax.github.io/gsoc_checklist.html and you can reach out at https://osarch.org/chat/

Here's a list of people who have reached out so far. Nobody has written an application yet, there have been no 1-on-1 discussions, and no patches have been written.

So then I'm not eligible; tough but fair... Let the young fellows dive into it!

That said, I would really like to follow along, participate in discussions, and maybe help with some code on the Blender web app subject.

I wonder if that is manageable without interfering too much in the GSoC process? I'll surely do some personal experiments sooner or later anyway...

It would be cool if, for those applications that don't make the cut for GSoC but are still intriguing to the OSArch community, OSArch could fund the work, that is, if the applicant is still interested in pursuing it. Maybe we could fund it through this funding mechanism.