@Moult said:

This is really great stuff! How easy is it to convert Sverchok scripts into Python code that can be run as an add-on?

Pinging @nikitron for the answer, I still haven't gotten around to looking at Blender plugin creation, unfortunately.

For me the biggest issue now is the lack of a standard for multilayered walls and parametric door/window objects in Blender (I'm not sure what Archipack does, but the pro version is not open source anyway). If we agree on something in these areas, we can start developing a plugin.

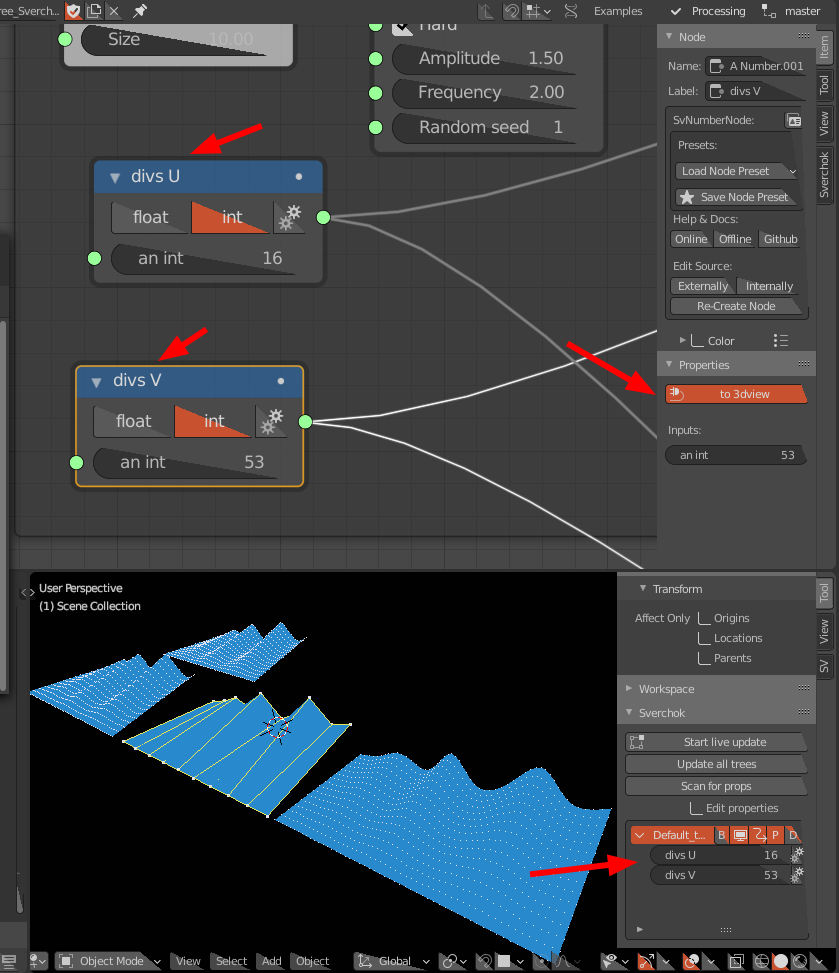

For now you can use Sverchok itself as the add-on (a node tree can be exposed in a 3D viewport panel). Generating scripts from a tree would need refactoring, but it should be possible.

Next step in grease pencil sketching to model: Snapping to predefined axes (and generating volumes)

The user defines vectors for the axis directions, each reference line finds the closest one (angularly), and each wall snaps to the direction assigned to it.
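To make the snapping idea concrete, here is a minimal sketch in plain Python, not the actual Sverchok node setup. It assumes 2D lines given as start/end tuples; the names `closest_axis` and `snap_line` are hypothetical, and rotating the line about its midpoint while keeping its length is just one possible choice.

```python
import math

def closest_axis(direction, axes):
    """Return the index of the axis with the smallest angle to `direction`.

    Lines have no orientation, so the absolute cosine is compared.
    """
    dx, dy = direction
    dlen = math.hypot(dx, dy)
    if dlen == 0.0:
        raise ValueError("zero-length line has no direction")
    best_index, best_cos = 0, -1.0
    for i, (ax, ay) in enumerate(axes):
        cos = abs(dx * ax + dy * ay) / (dlen * math.hypot(ax, ay))
        if cos > best_cos:
            best_index, best_cos = i, cos
    return best_index

def snap_line(start, end, axes):
    """Rotate a line about its midpoint so it is parallel to the closest axis.

    Returns (new_start, new_end, axis_index). The length is preserved.
    """
    dx, dy = end[0] - start[0], end[1] - start[1]
    i = closest_axis((dx, dy), axes)
    ax, ay = axes[i]
    alen = math.hypot(ax, ay)
    ux, uy = ax / alen, ay / alen
    # Keep the original drawing direction of the line.
    if dx * ux + dy * uy < 0:
        ux, uy = -ux, -uy
    half = math.hypot(dx, dy) / 2
    mx, my = (start[0] + end[0]) / 2, (start[1] + end[1]) / 2
    return (mx - ux * half, my - uy * half), (mx + ux * half, my + uy * half), i

# Hypothetical axes: plain X and Y directions.
axes = [(1.0, 0.0), (0.0, 1.0)]
print(snap_line((0.0, 0.0), (10.0, 1.0), axes))
```

A slightly tilted stroke ends up horizontal here because the X axis is angularly closest; any number of user-defined axis vectors can be passed in.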

My current concept is to create a set of "modules" (the kind you know from Darktable, if you are familiar with it). Each module acts independently and can be turned on or off (sort of like adjustment layers in Photoshop, now that I think about it). It doesn't change the original geometry, but takes it as a source and creates a modified copy. This way the whole thing stays completely parametric: the only data in the file is the original geometry, the modules used and their parameters.

Modules:

1. GP to lines - takes GP strokes and turns them into straight lines

2. Cleanup - takes straight lines and searches for lines with similar direction and colliding ends to turn them into a single line

3. Axes recognition - takes straight lines and turns them into straight lines with an appropriate axis as a parameter

4. Axes snapping - takes straight lines with axis parameter and turns them into lines parallel to one of the axes

5. Connections recognition - takes lines and turns them into lines with lines they are connected to as parameters

6. Trimming - takes lines and trims the ends that go over connected lines

7. Dimensioning - takes lines and moves them according to the dimension input

8. Connection keeper - takes lines with connected lines as parameters and extends/shortens them to keep the connections

9. Adding openings - takes two sets of lines and creates objects on their intersections

As of now I have covered all but 2, 7 and 8 in my demos. Points 2 and 8 should be easy; 7 should in principle also be easy, but I have no idea how to make a UI for it.
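The module idea above could be sketched roughly like this in plain Python. This is only an illustration under assumptions, not the Sverchok implementation: `Module`, `run_stack`, `gp_to_lines` and `snap_to_xy` are hypothetical names, strokes are lists of 2D points, and the two example modules are simplified stand-ins for modules 1 and 4.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

@dataclass
class Module:
    """One step of the stack: a function plus its parameters and an on/off switch."""
    name: str
    func: Callable[..., List[Any]]
    params: Dict[str, Any] = field(default_factory=dict)
    enabled: bool = True

def run_stack(source, stack):
    """Feed a copy of the source through every enabled module, in order."""
    data = list(source)  # the original geometry is never modified
    for module in stack:
        if module.enabled:
            data = module.func(data, **module.params)
    return data

def gp_to_lines(strokes):
    """Stand-in for module 1: replace each stroke by the segment first point -> last point."""
    return [(s[0], s[-1]) for s in strokes if len(s) >= 2]

def snap_to_xy(lines):
    """Stand-in for module 4: make each line horizontal or vertical (length may change slightly)."""
    snapped = []
    for (x0, y0), (x1, y1) in lines:
        if abs(x1 - x0) >= abs(y1 - y0):
            y = (y0 + y1) / 2
            snapped.append(((x0, y), (x1, y)))
        else:
            x = (x0 + x1) / 2
            snapped.append(((x, y0), (x, y1)))
    return snapped

strokes = [[(0.0, 0.0), (1.0, 0.1), (4.0, 0.2)]]
stack = [Module("GP to lines", gp_to_lines), Module("Axes snapping", snap_to_xy)]
print(run_stack(strokes, stack))
```

Because each module returns a new list, switching one off (`stack[1].enabled = False`) and re-running gives the unsnapped lines again, and the file only needs to store the strokes, the module list and the parameters.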

Thoughts?

Btw, as soon as I figure out how to link the objects to collections properly and create Qto sets (as I mentioned here), it will also be possible to autogenerate room tags for the volumes and export them directly as IFC volumes and 2D diagrams.

Hi everybody, I think this feature has great potential, but I wanted to share a consideration. In my experience it is necessary to draw by hand when I am designing a product and need to think about what it should be. When I do this on paper, one thing that helps me a lot is the scale of representation. Each scale implies a certain degree of detail, which helps to clarify things. Furthermore, by drawing to scale you acquire automatisms that let you correctly represent what you have in mind. So I ask: would it be possible in the future to implement a function that shows objects at the correct scale on the monitor, maybe even with the option to lock it? For example, in an urban project I would be able to visually quantify the size of a certain portion of the lot (intuitively, without using tools), and I could position elements such as trees of different sizes simply by sketching. It would also be very useful to be able to superimpose Grease Pencil on the scale model. Thanks to all of you for this space.

Yes, this is important. I am not yet sure what the correct approach should be, as most digital painting hardware is rather small, so it is usually necessary to pan and zoom a lot. The most fluent way I have found is using the left hand to pan/pinch-zoom and the right one to sketch.

Anyway, it is kind of possible in Blender already. The default grid is metric, and you can precisely control your zoom using the focal length in the "N" panel for an orthographic view camera. In my case I can set the focal length to 65,7mm and the grid becomes about 1cm on my display, which makes it 1:100. (I found that out by measuring the grid on my display with a tape measure, ehm.)

We definitely need a user-friendly way to set this up and, as you said, perhaps also to lock the scale, a shortcut to switch between scales, and so on. None of this should be too difficult to do with a plugin, I think, except for getting the real-world pixel size automatically. Here I am a bit concerned, since my 100% in Photoshop or Corel was always off and I had to set it manually (but Acrobat Reader gets it right, so it can't be that hard).
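The arithmetic behind a true-to-scale view is simple once the physical pixel size is known. Here is a small sketch of it; the function names are hypothetical, the 1920 px / 96 dpi values are made-up example numbers, and how the result maps onto Blender's own view settings is deliberately left out.

```python
INCH_M = 0.0254  # metres per inch

def on_screen_mm(model_length_m, scale_denominator):
    """Size in millimetres that a model length should have on screen at 1:scale_denominator."""
    return model_length_m * 1000.0 / scale_denominator

def visible_width_m(viewport_px, dpi, scale_denominator):
    """Model width in metres that an orthographic view must span to appear at 1:scale_denominator.

    `dpi` is the real pixel density of the display, which is the value
    that is hard to obtain automatically.
    """
    physical_width_m = viewport_px / dpi * INCH_M
    return physical_width_m * scale_denominator

# A 1 m grid cell at 1:100 should measure 10 mm on the display.
print(on_screen_mm(1.0, 100))

# Assumed example: a 1920 px wide viewport on a 96 dpi display is about
# 0.508 m wide, so at 1:100 it should show about 50.8 m of the model.
print(visible_width_m(1920, 96, 100))
```

This matches the tape-measure check above: a 1 m grid cell that measures about 1 cm on the display means the view is at 1:100.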

@JanF would you mind sharing your blend or json file for your latest Greasepencil BIM modelling sverchok setup? I would love to play around with it. Thank you!

@Sinasta Made a repo just to feel cool: https://github.com/JanFilipec/SketchyBIM

Let me know if you have any questions. One thing you are likely to experience is that when you open the file and start adding strokes to an existing grease pencil layer, the existing strokes disappear. I don't know why Blender does that, sorry.

These studies look really promising for quick sketches! Amazing!

My question is how easy it is to move a wall around afterwards. I saw that erasing the perpendicular strokes gets rid of doors and windows, so I'm curious how it works for walls.

Further on, it could be super cool to be able to draw some types of symbols inside the space to add simple furniture representations - e.g. a small rectangle that doesn't cross a wall adds a standard double bed (simple extrusion), a cross adds a chair, an oval a table, etc.

Would it be an overstatement to say Grease Pencil is the future?

Line art creation (not just rendering) is now possible in Blender via Grease Pencil, and it will be in the main build, not an add-on, not a custom build of Blender. This should expose more things in the API that can be taken advantage of.

@JanF I think this further reinforces the possibilities of the sketch design workflow you've been experimenting with here with others. I've been thinking your Sverchok solution really needs to find convergence with @brunopostle's Homemaker + Topologise implementation in Blender.

Also, @Moult, @Andyrexic and @stephen_l, it seems this might be a worthy contender to solve the CAD documentation challenges we have with Blender. It would mean treating Grease Pencil the way Layout relates to SketchUp and Form Z Layout relates to Form Z. MeasureItArch should be able to work on Grease Pencil objects on a dedicated layer... yes, that's the beauty of the thing: Grease Pencil has layers, so a workflow similar to what people are used to in other tools can translate directly. Grease Pencil already has an edge because it is so powerful for 2D sketching and illustration / digital painting tasks (and of course 2D animation; the new interpolation feature combined with the line art feature, for example, would be very useful for creating assembly animations from 3D models). It will outclass the Layout solutions in other apps once keyed into the construction documentation (and design presentation) pipeline.

I can already see how Grease Pencil CAD layout templates can be created to store default settings, etc. and maybe even customised to suit a BIM workflow. And that is where I see the potential magic in all this, not just creating interesting features, but developing a unique and seamless workflow from sketch design to massing and conceptual modelling, to construction documentation, all within Blender. Thank you guys for doing all the hard work that keeps opening up all these possibilities.

Lines are "exportable" as DXF and SVG; I'm not certain of the state of Grease Pencil support in the exporters at this time. However, handling GP lines in SVG export should not be an issue.

The point with documentation is more to find a way to provide vector-based side views.

As for using GP as a draft, Archipack's "walls from curve" implements support for Grease Pencil.

I love the generate rooms approach. I think that's THE approach.

Generating rooms into a volume as you are addressing in top view, is exactly what we do when we sketch. When we sketch we don't define walls, but we do define boundaries of spaces, or we divide an overall shape into smaller spaces, or we draw some lines to extend current shapes...

That's why the usual BIM software workflow is the wrong approach and sketching is the right approach for idea exploration. BIM focuses on walls, and in every example people already know what to model. I think we are still attached to that wall-and-window approach when there is so much more in architecture. If you stick to it, you're just doing the same thing with a nicer UI.

Sketching focuses on splitting and connecting spaces and on the freedom of lines to represent anything. We expand ideas freely without thinking about exactly what they will become in the end. It might be a wall, a screen or a line in the ground. Therefore BIM comes later, when you design a wall, not an abstraction of a boundary that you don't yet know the nature of. However, if the gap between BIM and sketching were not there, that would be great.

Your plan sketch and splitting into spaces is, therefore, more in line with the root of how we think, imho.

However, Grease Pencil in SketchyBIM should quickly detach itself from an exclusively plan-based sketching workflow and think in a broader scope as soon as possible.

Having said that, this particular experiment you have, could be adapted to the whole process. What you have done here is the answer for a lot of things. Actually, for me, this is the starting point for a full architectural workflow using grease pencil.

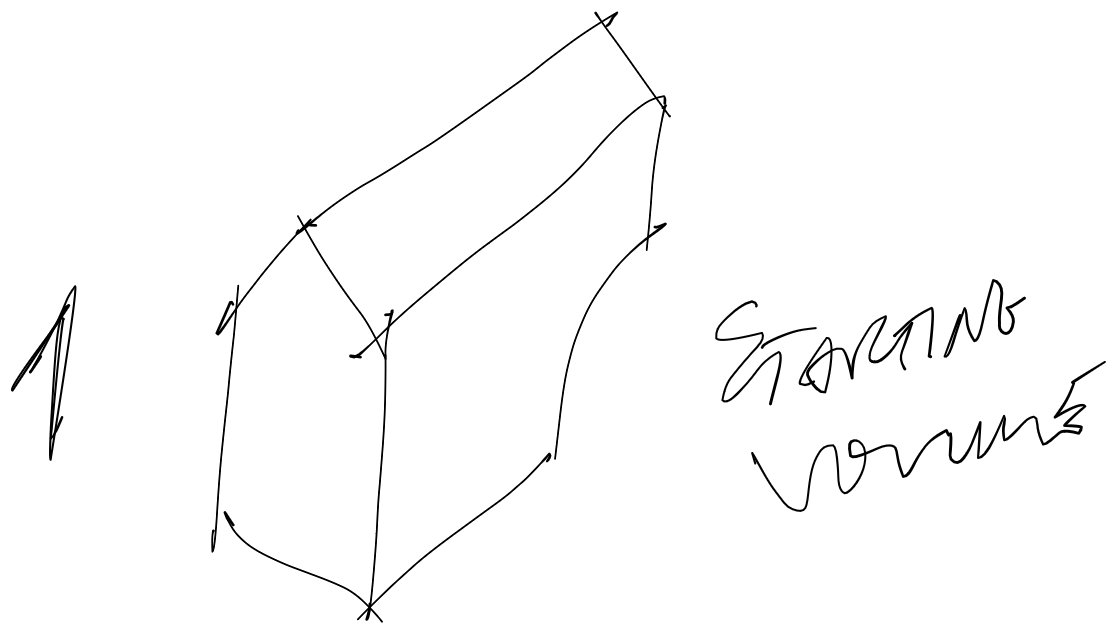

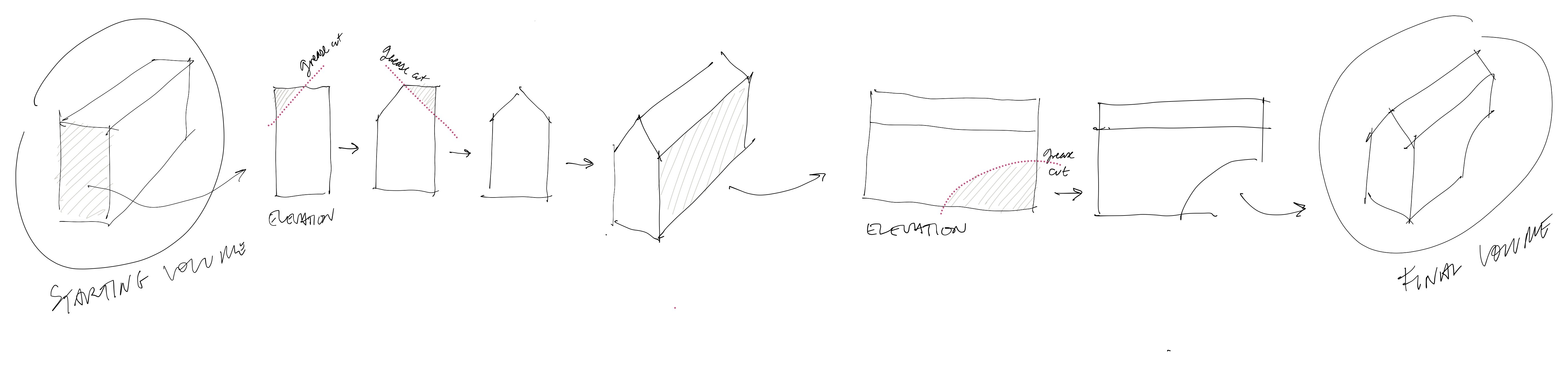

1 - START WITH A VOLUME

Imagine that you have a starting volume and not a plan view of a floor volume. You could have modeled in any way you wanted and I would also suggest a method to mass your volume with grease pencil, but let's leave that for the end.

You would now use Grease pencil on it. So you have an initial massing study. Something like this:

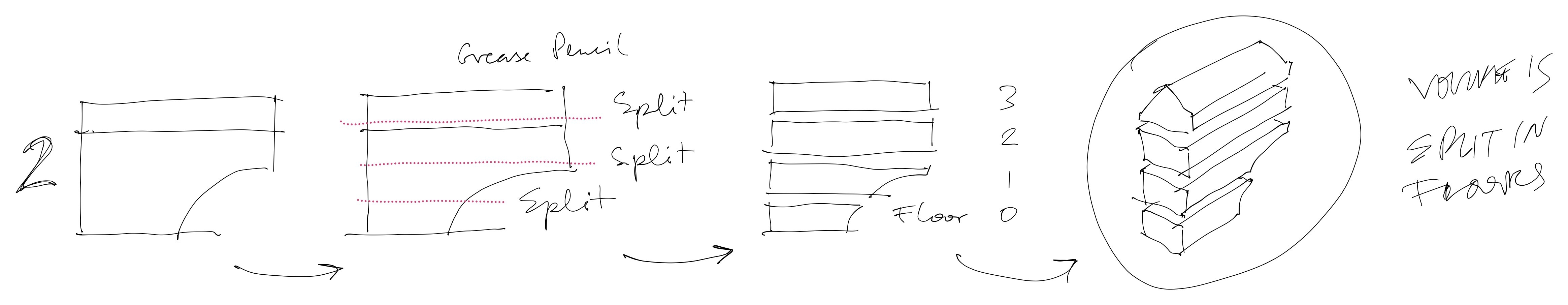

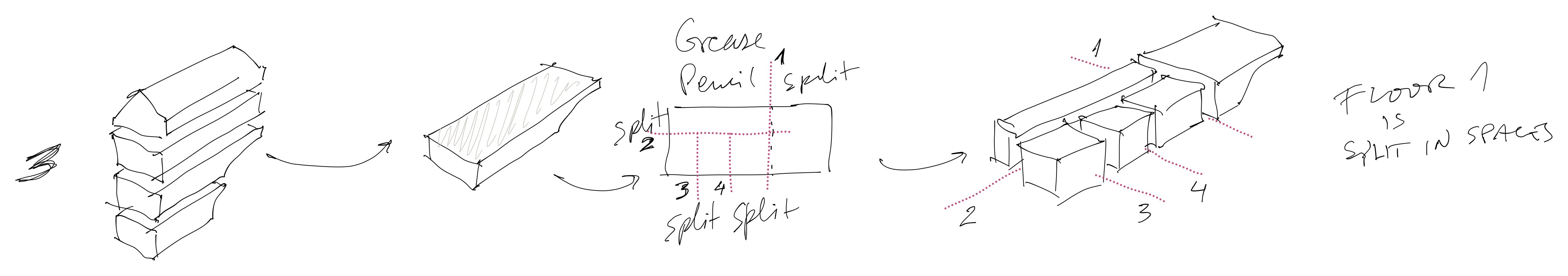

2 - SPLIT IT INTO FLOORS

Now we just look at it sideways and start splitting it in floors with the grease pencil, like this:

Like you did but for a building mass.

3 - PICK A FLOOR AND SPLIT IT INTO SPACES

Then, it would be a matter of defining which floor you want to work with, isolating it and applying the same method to split it into spaces.

We would thus split the floor into spaces to create a base floor plan.

4 - COMMON FLOOR ELEMENTS

If you'd want to replicate this floor plan to all floors, you could.

If you'd just want to pick some of the elements and carry them to other floors, you also should be able to.

That would allow us to either define typical floors, or define elements in the building that should be carried over to other floors. When drawing those extra floors we would have a base to stick to, when sketching their spaces.

After defining common elements we would then be able to isolate each floor in turn, have the replicated elements already in place, and design all other spaces based on them.

5 - DEFINING FAÇADE, SLAB AND WALL THICKNESS

I honestly wouldn't bother with that. You're using Sverchok, and Topologic is being adapted to it as we speak. It will probably be a matter of using your tool to sketch and having Topologic do the rest.

6 - GETTING BACK AT THE START

What if Grease Pencil could be used to sculpt the initial shape like this:

A couple thoughts: I absolutely agree with the spatial approach. It matches a common paradigm of how a lot of architects think. Not all architects, but certainly a lot. This approach and "BIM" are not mutually exclusive. Certainly the existing toolkit of proprietary BIM doesn't follow this approach, but in both the BlenderBIM Add-on and FreeCAD there are no restrictions, so it is good to reimagine our BIM workflows :) Very excited to see how this emerges into workflows that proceed from abstract to concrete, from macro to micro scales, etc.

A thought about greasepencil. I have a few reservations in its use in CAD documentation. Greasepencil is powerful, but I see it as an extension, not a foundation to build upon. The foundation needs to be something agnostic of Blender's Greasepencil, as architects do not work alone, and even use multiple programs. Documentation is an important legal contract where the data needs to be semantic and persistent, and documentation usually combines many 3D models and 2D overlays across many disciplines, especially on large projects. From a purely technical perspective, piping all of this through greasepencil is not very promising. So as long as there is a foundation in place (which quite a number of us are investigating), I would love to incorporate greasepencil as an extension. What this future looks like, I don't know :) Lots of work to do still!

I would say grease pencil is something that would make sense at the start of the project, while iterating for solutions.

After having split a solid into floors and a floor into spaces, the geometric foundation is there and different approaches could help consolidate it. Identifying these spaces with Topologic and populating them with IFC properties automatically with BlenderBIM's help would be feasible, right?

How soon could that help us integrate BIM in a project? I think that holds potential.

After this was done, we could start using other tools that could create constraints on geometry, generate 2D symbology like structural axis or section lines and floor heights...

Then we could move on to higher levels of detail. At that point Grease Pencil could lose its value, as we might need very accurate tools, but I have some ideas on how it could further be used to populate openings, create complex structure concepts and models for HVAC and plumbing, and even electrical schematics.

Blender has many ways of modeling things, and that can help make it happen. At higher LOD Blender's direct modeling tools will be needed, but it's a continuous environment for doing everything.

I hope we can find a way to bind the required direct modeling to the initial geometry from grease pencil, topologic and constraints, so we could have some kind of non destructive modelling workflow.

That workflow would be the mantra for connecting concept to construction documentation, and if that is possible, Grease Pencil will always be useful, as it is the base that can be refined without losing its raw potential.

We could think on increasing LOD as a stack of Blender modifiers. That stack would affect the base geometry created with grease pencil. Redraw the base, identify the cell complex, find the floors, spaces, staircases and elevators, and reapply the modifier stack on the new geometry.

Isn't that what Topologic is proposing and Topologise is trying to achieve?

Isn't that what geometric nodes and Sverchok allow?

And can't Archipack be integrated into Sverchok for this higher LOD?

As well as other projects around here?

Isn't all that bindable to BlenderBIM in order to create a structured IFC, extract 2D documentation and schedules?

And if we work with a Blender modifier stack for higher LOD can't we have simultaneously a model for documentation and presentation and a model for analysis?

If we find a way to bridge all of this, wouldn't we be able to really change how we design? How great could that be for the whole world if it were done in an open source environment...

We have all the tools to start with. I would love to discuss how to bind them.

Just for those reading through this thread who might've forgotten this thread, thought I'd bring it up :) Here is a fun example of mixing conceptual geometry with BIM: https://community.osarch.org/discussion/comment/1669

@Moult Do you know whether the original model was created using Grease Pencil, or was it modeled first and then drawn upon with Grease Pencil? I'm trying to understand how it works.

@JanF I've downloaded the blend file too, and I see that once I draw an enclosed shape it auto-extrudes to the set plan height; if I keep drawing, it splits it.

I think making a cohesive toolset for this would be a matter of creating a series of Sverchok scripts for things like boolean operations, bevels, splits, etc., and putting them all in a toolbar.

Am I right?

Is this what you mean with:

@JanF said:

My current concept is actually to create a set of "modules" (if you are familiar with Darktable that kind of modules I mean) - each module acts independently and can be turned on or off (sort of like adjustment layers in Photoshop, now that I'm thinking about it), it doesn't change the original geometry, but takes it as a source and creates a modified copy, this way the whole thing stays completely parametric, the only data in the file is the original geometry, the modules used and their parameters.

Modules:

1. GP to lines - takes GP strokes and turns them into straight lines

2. Cleanup - takes straight lines and searches for lines with similar direction and colliding ends to turn them into a single line

3. Axes recognition - takes straight lines and turns them into straight lines with an appropriate axis as a parameter

4. Axes snapping - takes straight lines with axis parameter and turns them into lines parallel to one of the axes

5. Connections recognition - takes lines and turns them into lines with lines they are connected to as parameters

6. Trimming - takes lines and trims the ends that go over connected lines

7. Dimensioning - takes lines and moves them according to the dimension input

8. Connection keeper - takes lines with connected lines as parameters and extends/shortens them to keep the connections

9. adding openings - takes two sets of lines and creates objects on their intersections

As of now I covered all but 2, 7 and 8 in my demos, points 2 and 8 should be easy, 7 should be in principle also easy, but I have no idea how to make an UI for it.

Just tried it today and I'm officially in love with Grease Pencil...

I still haven't mastered navigation, as I miss touch input, but the pen glides on shapes like it's 3D paper.

I'm still too afraid to commit myself to start modeling in Blender, however I tried using these GP sketches further.

There are some issues that I came across:

GP strokes are very inaccurate by nature. That's why I like them for their original purpose, but that's probably the issue that @JanF is trying to address in his experiments in order to achieve accurate modelling. If you want, I would love to share some ideas on how to address those issues in an intuitive way, as I've been trying to think about this from a user perspective. I can learn Sverchok as I go along.

I know GPs can be further edited or converted to curves or meshes, but at this point, for now, it's easier to use them as reference and create geometry with other modeling tools.

As modeling in Blender still isn't for me, I tried projecting these Grease Pencil strokes onto a UV-unwrapped texture on the model so I could export it and model in my own modeling app. This is not possible: GP strokes can't be baked into a model's textures.

So I started using Texture Paint to texture. This works as it results in the texture being exportable along with the model.

Texture painting isn't as fluid as GP, though. You can only paint on one object at a time, and changing objects requires switching to Object Mode, selecting the next object and then going back to Texture Paint mode. We lose a lot of fluidity in the process, and the experience is not as dynamic as applying Grease Pencil strokes on top of models.

Texture painting turns out to be usable for me now, as I can treat it as something embedded in the actual model. It's like 3D paper. Sketching and iterating ideas at a conceptual stage could become very rewarding if minor workflow details were improved in Blender. The idea that for a single model we can save several alternative textures and compare them just by switching between them is very interesting for collaborating and discussing solutions.

As I have other tools for painting, I tested this on Substance Painter. SP allows painting through multiple objects and materials, or using materials with UV tiles. I could then paint on the building and the ground. In other scenarios I'd be able to paint on buildings composed of multiple objects easily. Here's a doodle I did: (I had some face normal issues that I didn't fix, so I don't show the ground here)

I exported the textures created in SP and imported them into Sketchup (my main modeling app). I then modeled based on what I textured, rendered it inside Sketchup with a Sketchy edge style (first time I used that in my life) and the final result is this:

I can see myself creating some presentations with this method already, so thank you for this discussion.

I think a similar method could be achieved exclusively with Blender if:

Grease Pencil strokes could be projected/baked into the textures of a model. That would be perfect;

It would also be very interesting to have Blender's texture painting being able to work across multiple objects.

Using Grease Pencil with Sverchok for actually editing geometry is still a very interesting and valid idea and I will keep exploring how to use Grease Pencil Strokes to affect geometry, how they can be converted into accurate geometry on the fly or in a post process, with more control from the user and by imposing some geometric constraints to a geometry that was first created without that in mind.

It's also interesting to think about when, in a practical workflow, that should happen, and how and for what purposes.

@Moult said:

A couple thoughts: I absolutely agree with the spatial approach. It matches a common paradigm of how a lot of architects think. Not all architects, but certainly a lot. This approach and "BIM" are not mutually exclusive. Certainly the existing toolkit of proprietary BIM doesn't follow this approach, but in both the BlenderBIM Add-on and FreeCAD there are no restrictions, so it is good to reimagine our BIM workflows :) Very excited to see how this emerges into workflows that proceed from abstract to concrete, from macro to micro scales, etc.

A thought about greasepencil. I have a few reservations in its use in CAD documentation. Greasepencil is powerful, but I see it as an extension, not a foundation to build upon. The foundation needs to be something agnostic of Blender's Greasepencil, as architects do not work alone, and even use multiple programs. Documentation is an important legal contract where the data needs to be semantic and persistent, and documentation usually combines many 3D models and 2D overlays across many disciplines, especially on large projects. From a purely technical perspective, piping all of this through greasepencil is not very promising. So as long as there is a foundation in place (which quite a number of us are investigating), I would love to incorporate greasepencil as an extension. What this future looks like, I don't know :) Lots of work to do still!

I think we need both approaches, as they are not mutually exclusive - barring developer / time constraints (which admittedly are considerable). As they say, you can ride a bicycle while chewing gum. While I appreciate the need to prioritize round-tripping / interoperability, we should also not lose sight of opportunities native to Blender that might not be immediately replicable or even applicable in other tools. Besides, if a feature or workflow that's unique to Blender becomes successful and popular, it will most likely get copied in other tools. That means these efforts don't have to solve all the problems for all the tools, but can inspire new and hopefully more nuanced approaches across the board, without prejudice to the long game of interoperability. But yes, it's clearly a lot of work.

@JQL, apparently you can sketch on a proxy object like you've done and get a mesh out of it, in Blender, without having to project or bake textures. Editing the mesh is straightforward from that point, with basic knowledge of mesh handling and polygonal modelling in Blender, making it unnecessary to export out of Blender to get a final result. You can sketch on the proxy, make a mesh out of the sketch, edit the mesh, and sketch on it again:

I knew that Grease Pencil could be converted to meshes (and the other way around). I was afraid of that as it meant learning a lot, but I will follow your lead. It might get interesting indeed.

I've tried it and it does hold potential. A lot. I just feel modelling in Blender is not easy or nice. Too much eyeballing, and it is very hard to constrain geometry to the accuracy I want.

I'll keep pushing, slowly, possibilities are great.

Comments

@Moult could you please split the offtopic part of this thread into a new "grease pencil bim" topic? (starting roughly with my 7th September's post)

@JanF all done :) Would you like admin rights, by the way?

Great, thanks. And sure, I can keep an eye on the forum for a bit.

Done - have fun!

Fascinating!

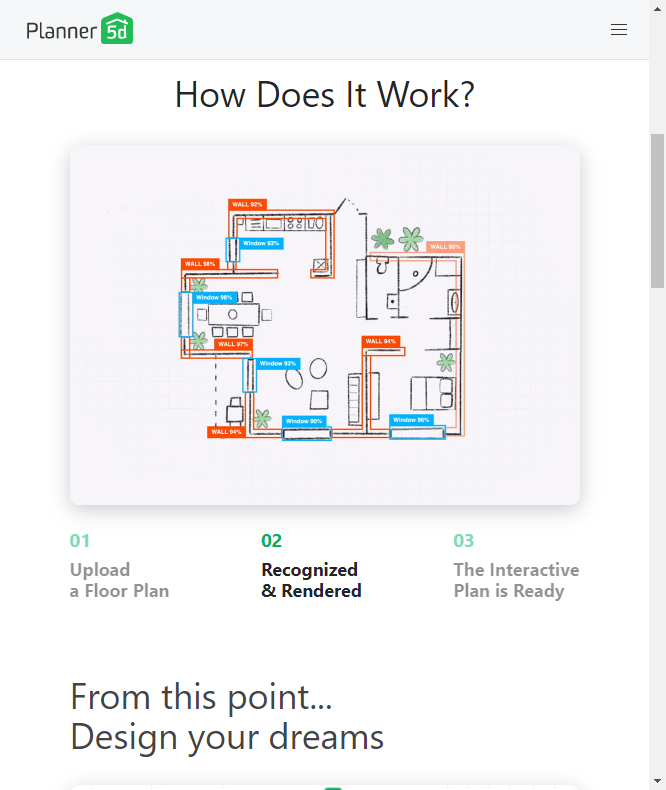

Planner 5D is taking a similar approach with artificial intelligence:

https://planner5d.com/ai/

I would actually expect something like this to be way more common nowadays. One way we could do this already is to use the vectorization in Inkscape; in my experience it works quite well. We could of course build an AI solution as well, I'm considering doing that for handwritten notes recognition. But there will always be issues with AI and online solutions, as discussed here: https://community.osarch.org/discussion/210/is-there-a-hidden-machine-learning-ai-competition#latest

@JanF would you mind sharing your blend or json file for your latest Greasepencil BIM modelling sverchok setup? I would love to play around with it. Thank you!

@Sinasta Made a repo just to feel cool:

https://github.com/JanFilipec/SketchyBIM

Let me know if you have any questions. One thing you are likely to experience is that when you open the file and start adding strokes to an existing Grease Pencil layer, the existing strokes disappear. I don't know why Blender does that, sorry.

These studies look really promising for quick sketches! Amazing!

My question is: how easy is it to move a wall around afterwards? I saw that erasing the perpendicular strokes gets rid of doors and windows, so I'm curious how it works for walls.

Further on, it could be super cool to be able to draw some types of symbols inside the space to add simple furniture representations, e.g. a small rectangle that doesn't cross a wall adds a standard double bed (simple extrusion), a cross adds a chair, an oval a table, etc.

Will it be an overstatement to say Grease Pencil is the future?

Line art creation (not just rendering) is now possible in Blender via Grease Pencil, and it will be in the main build, not an add-on and not a custom build of Blender. This should expose more things in the API that can be taken advantage of.

@JanF I think this further reinforces the possibilities of the sketch design workflow you've been experimenting with here with others. I've been thinking your Sverchok solution really needs to find convergence with @brunopostle's Homemaker + Topologise implementation in Blender.

Also, @Moult, @Andyrexic and @stephen_l, it seems this might be a worthy contender to solve the CAD documentation challenges we have with Blender. It will mean treating Grease Pencil like Layout is to SketchUp and Form Z Layout is to Form Z. MeasureItArch should be able to work on Grease Pencil objects on a dedicated layer... yes, that's the beauty of the thing: Grease Pencil has layers, so a workflow similar to what people are used to in other tools can translate directly. Grease Pencil already has an edge because it is so powerful for 2D sketching and illustration / digital painting type tasks (and of course 2D animation; the new interpolation feature combined with the line art feature, for example, would be very useful for creating assembly animations from 3D models). It will outclass Layout solutions in other apps once keyed into the construction documentation (and design presentation) pipeline.

I can already see how Grease Pencil CAD layout templates can be created to store default settings, etc., and maybe even customised to suit a BIM workflow. And that is where I see the potential magic in all this: not just creating interesting features, but developing a unique and seamless workflow from sketch design to massing and conceptual modelling, to construction documentation, all within Blender. Thank you guys for doing all the hard work that keeps opening up all these possibilities.

Lines are "exportable" as DXF and SVG, though I'm not certain of the state of Grease Pencil support in the exporters at this time. However, handling GP lines in SVG export should not be an issue.

The point with documentation is more to find a way to provide vector-based side views.

As for using GP as a draft, Archipack's "walls from curve" implements support for Grease Pencil.

I have some :)

I love the generate rooms approach. I think that's THE approach.

Generating rooms into a volume as you are addressing in top view, is exactly what we do when we sketch. When we sketch we don't define walls, but we do define boundaries of spaces, or we divide an overall shape into smaller spaces, or we draw some lines to extend current shapes...

That's why the usual BIM software workflow is the wrong approach and sketching is the right approach for idea exploration. BIM focuses on walls, and in every example people already know what to model. I think we are still attached to that wall and window approach when we have so much more in architecture. If you stick to it you're just doing the same with a nicer UI.

Sketching focuses on splitting and connecting spaces and on the freedom of lines to represent anything. We expand ideas freely without thinking about exactly what they will become in the end. It might be a wall, a screen or a line in the ground. Therefore BIM comes later, when you design a wall, not an abstraction of a boundary that you don't know what it is yet. However, if the gap between BIM and sketching were not there, that would be great.

Your plan sketch and splitting into spaces is, therefore, more in line with the root of how we think, imho.

However, Grease Pencil in SketchyBIM should quickly detach itself from an exclusively plan-sketching workflow and think in the broader scope as soon as possible.

Having said that, this particular experiment of yours could be adapted to the whole process. What you have done here is the answer for a lot of things. Actually, for me, this is the starting point for a full architectural workflow using Grease Pencil.

1 - START WITH A VOLUME

Imagine that you have a starting volume and not a plan view of a floor volume. You could have modeled it in any way you wanted, and I would also suggest a method to mass your volume with Grease Pencil, but let's leave that for the end.

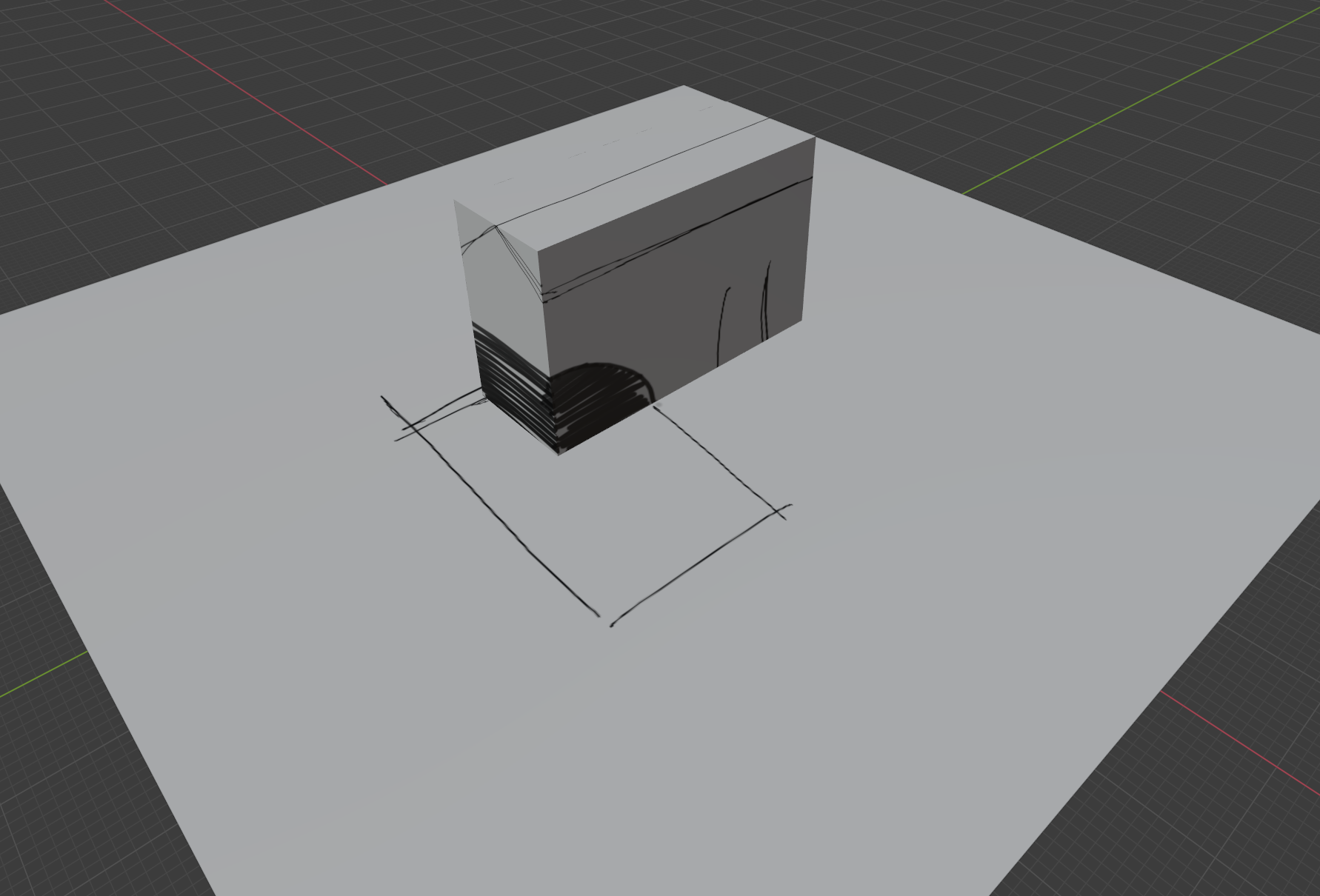

You would now use Grease pencil on it. So you have an initial massing study. Something like this:

2 - SPLIT IT INTO FLOORS

Now we just look at it sideways and start splitting it in floors with the grease pencil, like this:

Like you did but for a building mass.
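As a rough sketch of what that split could boil down to, the snippet below turns roughly horizontal side-view strokes into floor intervals. It is plain Python, not Sverchok or Blender API; the function names and the idea of averaging each stroke's z values are assumptions made for illustration only.

```python
def stroke_height(points):
    """Average z of a roughly horizontal stroke given as (x, y, z) tuples."""
    return sum(p[2] for p in points) / len(points)

def split_into_floors(base_z, top_z, cut_heights):
    """Return (bottom, top) z intervals of the mass between base_z and top_z,
    cut at every height that falls strictly inside the mass."""
    cuts = sorted(z for z in set(cut_heights) if base_z < z < top_z)
    levels = [base_z] + cuts + [top_z]
    return list(zip(levels, levels[1:]))

# Example: a 12 m high mass; strokes are drawn in any order, one misses the mass.
floors = split_into_floors(0.0, 12.0, [3.1, 9.0, 6.0, 15.0])
for number, (bottom, top) in enumerate(floors):
    print(f"floor {number}: {bottom} m to {top} m")
```

Each interval could then be handed to whatever extrudes or slices the actual geometry.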

3 - PICK A FLOOR AND SPLIT IT INTO SPACES

Then, it would be a matter of defining which floor you want to work with, isolating it and applying the same method to split it into spaces.

We would thus split the floor into spaces to create a base floor plan.

4 - COMMON FLOOR ELEMENTS

If you wanted to replicate this floor plan to all floors, you could.

If you just wanted to pick some of the elements and carry them to other floors, you should be able to do that too.

That would allow us to either define typical floors, or define elements in the building that should be carried over to other floors. When drawing those extra floors we would have a base to stick to, when sketching their spaces.

After defining common elements we could then isolate each floor in turn, have the replicated elements already in place, and design all other spaces based on them.

5 - DEFINING FAÇADE, SLAB AND WALL THICKNESS

I honestly wouldn't bother with that. You're using Sverchok and Topologic is being adapted to it as we speak. It will probably be a matter of using your tool to sketch and having Topologic do the rest.

6 - GETTING BACK AT THE START

What if Grease Pencil could be used to sculpt the initial shape like this:

A perfect world :)

A couple of thoughts: I absolutely agree with the spatial approach. It matches a common paradigm of how a lot of architects think. Not all architects, but certainly a lot. This approach and "BIM" are not mutually exclusive. Certainly the existing toolkit of proprietary BIM doesn't follow this approach, but in both the BlenderBIM Add-on and FreeCAD there are no restrictions, so it is good to reimagine our BIM workflows :) Very excited to see how this emerges into workflows that proceed from abstract to concrete, from macro to micro scales, etc.

A thought about greasepencil. I have a few reservations in its use in CAD documentation. Greasepencil is powerful, but I see it as an extension, not a foundation to build upon. The foundation needs to be something agnostic of Blender's Greasepencil, as architects do not work alone, and even use multiple programs. Documentation is an important legal contract where the data needs to be semantic and persistent, and documentation usually combines many 3D models and 2D overlays across many disciplines, especially on large projects. From a purely technical perspective, piping all of this through greasepencil is not very promising. So as long as there is a foundation in place (which quite a number of us are investigating), I would love to incorporate greasepencil as an extension. What this future looks like, I don't know :) Lots of work to do still!

( I just enjoy looking at the sketches in this thread )

I would say grease pencil is something that would make sense at the start of the project, while iterating for solutions.

After a solid has been split into floors and a floor into spaces, the geometric foundation is there and different approaches could help consolidate it. Identifying these spaces with Topologic and populating them with IFC properties automatically with BlenderBIM's help would be feasible, right?

How soon could that help us integrate BIM in a project? I think that holds potential.

After this was done, we could start using other tools that could create constraints on geometry, generate 2D symbology like structural axis or section lines and floor heights...

Then we could move on to higher levels of detail. At that point Grease Pencil could lose its value, as we might need very accurate tools, but I have some ideas on how it could further be used to populate openings, create complex structure concepts and models for HVAC and plumbing, and even electrical schematics.

Blender has many ways of modeling stuff and that can help it happen. At higher LOD Blender's direct modeling tools will be needed, but it's a continuous environment for doing everything.

I hope we can find a way to bind the required direct modeling to the initial geometry from grease pencil, topologic and constraints, so we could have some kind of non destructive modelling workflow.

That workflow would be the mantra for connecting concept to construction documentation, and if that is possible Grease Pencil will always be useful, as it is the base that can be refined without losing its raw potential.

We could think of increasing LOD as a stack of Blender modifiers. That stack would affect the base geometry created with Grease Pencil. Redraw the base, identify the cell complex, find the floors, spaces, staircases and elevators, and reapply the modifier stack to the new geometry.
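To illustrate the idea only, here is a toy sketch in plain Python in which the "model" is a dictionary and each "modifier" is a function that returns an enriched copy. None of these names come from Blender, Sverchok or Topologic; they are hypothetical stand-ins for the real steps.

```python
from functools import reduce

def split_floors(model, floor_height=3.0):
    """Hypothetical step: derive a floor count from the mass height."""
    return {**model, "floors": int(model["height"] // floor_height)}

def add_slabs(model, thickness=0.25):
    """Hypothetical step: one slab per floor plus the roof slab."""
    return {**model, "slabs": [thickness] * (model["floors"] + 1)}

def apply_stack(base, stack):
    """Run every step in order on a copy, leaving the sketched base untouched."""
    return reduce(lambda model, step: step(model), stack, dict(base))

stack = [split_floors, add_slabs]

base = {"height": 9.0}
first = apply_stack(base, stack)

# Redraw the base: the same stack is simply reapplied to the new geometry.
redrawn = apply_stack({"height": 12.5}, stack)
print(first["floors"], redrawn["floors"])
```

The point of the design is that only the base and the stack are stored, so the detailed model can always be regenerated after a redraw.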

Isn't that what Topologic is proposing and Topologise is trying to achieve?

Isn't that what geometric nodes and Sverchok allow?

And can't Archipack be integrated into Sverchok for this higher LOD?

As well as other projects around here?

Isn't all that bindable to BlenderBIM in order to create a structured IFC, extract 2D documentation and schedules?

And if we work with a Blender modifier stack for higher LOD can't we have simultaneously a model for documentation and presentation and a model for analysis?

If we find a way to bridge all of this, wouldn't we be able to really change how we design? How great would it be for the whole world if that were done in an open source environment...

We have all the tools to start with. I would love to discuss how to bind them.

Just for those reading through this thread who might've forgotten this thread, thought I'd bring it up :) Here is a fun example of mixing conceptual geometry with BIM: https://community.osarch.org/discussion/comment/1669

@Moult Do you know whether the original model was created using Grease Pencil, or was it modeled first and then drawn upon with Grease Pencil? I'm trying to understand how it works.

@JanF I've downloaded the .blend file too and I see that once I draw an enclosed shape it auto-extrudes to the set plan height, and if I keep drawing it splits it.

I think making a cohesive toolset for this would be a matter of creating a series of Sverchok scripts for things like boolean operations, bevels, splits, etc., and putting them all in a toolbar.

Am I right?

Is this what you mean with:

Just tried it today and I'm officially in love with Grease Pencil...

I still haven't mastered navigation as I miss touch input, but the pen glides on shapes like it's 3D paper.

I'm still too afraid to commit myself to modeling in Blender, however I tried taking these GP sketches further.

There are some issues that I came across:

So I started using Texture Paint to texture. This works as it results in the texture being exportable along with the model.

Texture painting isn't as fluid as GP though. You can only paint on one object at a time, and changing objects requires switching to object mode, selecting the next object and then going back to texture painting mode. We lose a lot of fluidity in the process and the experience is not as dynamic as applying Grease Pencil strokes on top of models.

As I have other tools for painting, I tested this on Substance Painter. SP allows painting through multiple objects and materials, or using materials with UV tiles. I could then paint on the building and the ground. In other scenarios I'd be able to paint on buildings composed of multiple objects easily. Here's a doodle I did: (I had some face normal issues that I didn't fix, so I don't show the ground here)

I exported the textures created in SP and imported them into SketchUp (my main modeling app). I then modeled based on what I had textured, rendered it inside SketchUp with a Sketchy edge style (first time I used that in my life) and the final result is this:

I can see myself creating some presentations with this method already, so thank you for this discussion.

I think a similar method could be achieved exclusively with Blender if:

Using Grease Pencil with Sverchok for actually editing geometry is still a very interesting and valid idea and I will keep exploring how to use Grease Pencil Strokes to affect geometry, how they can be converted into accurate geometry on the fly or in a post process, with more control from the user and by imposing some geometric constraints to a geometry that was first created without that in mind.

It's also interesting to think about when in a practical workflow that should happen, and how and for what purposes.

I'd love to discuss this further.

I think we need both approaches, as they are not mutually exclusive - barring developer / time constraints (which admittedly are considerable), as they say, you can ride a bicycle while chewing gum. While appreciating quite well the need to prioritize round tripping / interoperability, we should also not lose sight of opportunities native to Blender that might not be immediately replicable or even applicable in other tools. Besides, if a feature or workflow that's unique to Blender becomes quite successful and popular, it most likely will get copied in other tools, meaning these efforts don't have to solve all the problems for all the tools, but can inspire new and hopefully more nuanced approaches across the board, without prejudice to the long game of interoperability. But yes, it's clearly a lot of work.

@JQL, apparently you can sketch on a proxy object like you've done and get a mesh out of it, in Blender, without having to project or bake textures. Editing the mesh is straightforward from that point, with basic knowledge of mesh handling and polygonal modelling in Blender, making it unnecessary to export out of Blender to get a final result. You can sketch on the proxy, make a mesh out of the sketch, edit the mesh, and sketch on it again:

I knew that Grease Pencil could be converted to meshes (and the other way around). I was afraid of that as it meant learning a lot, but I will follow your lead. It might get interesting indeed.

Thanks!

I've tried it and it does hold potential. A lot. I just feel modelling in Blender is not easy or nice. Too much eyeballing, and it's very hard to constrain geometry to the accuracy I want.

I'll keep pushing, slowly; the possibilities are great.